Translated from Japanese. Original source

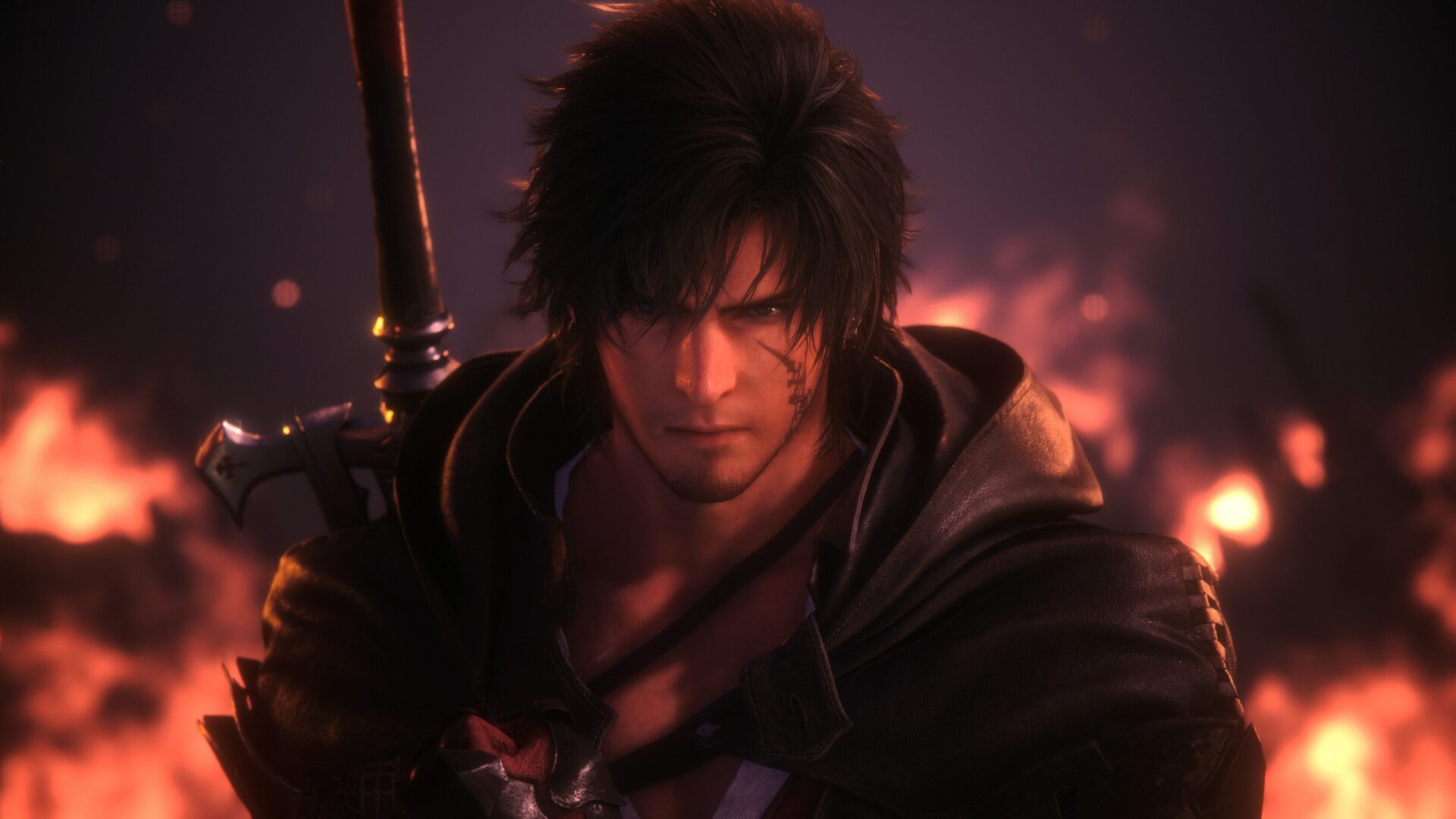

Trailer for FINAL FANTASY XVI

FINAL FANTASY XVI (FF16) was released on Thursday, June 22, 2023, as the first full-action RPG in the beloved series. The latest numbered title combines a captivating story, stunning graphics, and exciting action gameplay. The development team went above and beyond to craft cinematic cutscenes that give players an enthralling, theatrical experience. But creating an epic that would go on to be played by millions was not without its challenges. The artists responsible for these cutscenes faced several obstacles, which drove them to experiment with new techniques to raise the overall quality and meet the expectations of a dedicated fanbase. We spoke with Eitaro Iwabuchi (Lead Cinematic Technical Artist), Atsushi Sakamoto (Cinematic Technical Artist), Takeo Suzuki (Cinematic Manager), Yuki Sawada (Lead Cutscene Motion Artist), and Akihito Higashikawa (Lead Rigging Artist) about their journey creating the smash hit game.

© SQUARE ENIX

The Final Fantasy XVI team used Autodesk Maya, MotionBuilder, and Flow Production Tracking (formerly ShotGrid) to raise the bar on their visuals.

SQUARE ENIX and Autodesk collaborated to push the boundaries of game development

“There are employees who take paid time off to play FF16.”– X User

During the summer of 2023, it was common to see adults in Japan making statements like the one above on social media. This is a testament to the significance of entertainment in modern Japan. Millions of people had been eagerly awaiting the release of FF16.

The first “Final Fantasy” was released in 1987, and “FINAL FANTASY VII,” released in 1997, changed the world by moving the series from 2D to 3D. The game also drew attention for its significant impact on how games are played and produced. In fact, playing the Final Fantasy series from start to finish is a good crash course in the evolution and history of 3D CG in games. The Final Fantasy series celebrates its 36th anniversary this year.

One reason the franchise has won the hearts of its fans is its movie-like cutscenes. Since the series’ inception, SQUARE ENIX has been focused on creating captivating stories, unique worlds, and impressive visuals.

Using Autodesk’s tools and development environment, SQUARE ENIX is building new extensions to improve graphics and the overall experience. Currently, the Final Fantasy series is developed using Maya, MotionBuilder, and Flow Production Tracking (formerly ShotGrid).

© SQUARE ENIX

The development team behind FF16 took on various challenges to deliver a visually stunning and immersive experience for fans, aiming for greater aesthetic quality and richness than previous installments. In addition to captivating fans with breathtaking cinematic cutscenes, FF16 also set out to deliver a compelling story full of engaging drama.

Lead Cutscene Motion Artist, Yuki Sawada

The development team wanted to create facial expressions and animations that enchant players. To reproduce the vivid, delicate, human-like movements of the facial muscles, the rigging team maintained close contact with the animation team from the early stages of development. Lead Cutscene Motion Artist Yuki Sawada emphasized the importance of realistic facial expressions: “Motion capture technology is important, but you can’t create good facial expressions unless you have a good rig. To reproduce the vivid facial expressions of human beings, down to the slightest movements of the pupils and eyelids, we worked closely with the rigging team to study facial expressions and movements thoroughly.”

The rigging team focused on supporting the animators in creating the desired facial expressions and movements. They actively consulted the animation team and listened to various perspectives, which made for seamless collaboration. Lead Rigging Artist Akihito Higashikawa explains, “As a result, even in the early stages of development, we felt we already had the means and the feedback to meet their wishes.”

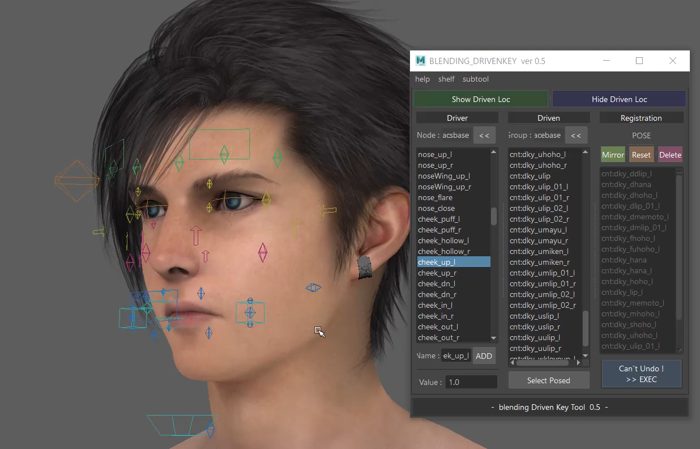

To ensure a smooth workflow and comfort for the animators, Mr. Higashikawa chose to build the rig on the widely adopted FACS (Facial Action Coding System), which the animators were already familiar with and used daily. Some poses that are not part of FACS were also registered. The rigging team prioritized ease of use for animators when selecting facial rigs, and dedicated their technical expertise and imagination to helping animators create the desired expressions without stress.

The team at SQUARE ENIX developed a tool to control the facial expressions of characters in Maya based on FACS.
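To make the idea of a FACS-based rig concrete, here is a minimal sketch of a pose library keyed by FACS Action Unit, with non-FACS poses kept under a separate prefix. The `PoseRegistry` class, the key format, and every channel name are hypothetical and not taken from SQUARE ENIX's tool; only the AU numbers and names (AU12 Lip Corner Puller, AU45 Blink) come from the standard FACS taxonomy.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Channels = Dict[str, float]  # hypothetical: rig control attribute -> value


@dataclass
class PoseRegistry:
    """Hypothetical pose library: FACS Action Units, plus custom poses kept separate."""
    poses: Dict[str, Channels] = field(default_factory=dict)

    def register_au(self, au: int, name: str, channels: Channels) -> str:
        # Key poses by FACS Action Unit number so animators can find them by AU code.
        key = f"AU{au:02d}_{name}"
        self.poses[key] = dict(channels)
        return key

    def register_custom(self, name: str, channels: Channels) -> str:
        # Poses that FACS does not cover get their own prefix.
        key = f"CUSTOM_{name}"
        self.poses[key] = dict(channels)
        return key

    def facs_keys(self) -> List[str]:
        return sorted(k for k in self.poses if k.startswith("AU"))


registry = PoseRegistry()
registry.register_au(12, "LipCornerPuller", {"mouth_corner_L.ty": 1.0, "mouth_corner_R.ty": 1.0})
registry.register_au(45, "Blink", {"lid_upper_L.rx": 30.0, "lid_upper_R.rx": 30.0})
registry.register_custom("PupilDilate", {"pupil_L.scale": 1.2, "pupil_R.scale": 1.2})
print(registry.facs_keys())  # ['AU12_LipCornerPuller', 'AU45_Blink']
```

Keeping custom poses in their own namespace lets the FACS set stay recognizable to animators while still allowing extra shapes.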

“Using FACS as a base, we reflected the movements of the facial muscles on screen. If FACS is used as is, fine muscle movements and the nuances of facial expressions turn into ‘linear movements,’ so how can they be adjusted to look natural? We tried different ways of incorporating this into the rig so that it wouldn’t give a linear impression.” This underscores the team’s confidence that they could achieve the targeted facial expressions and movements with the resources and feedback they already had.

Lead Rigging Artist, Akihito Higashikawa

The “linear impression,” Mr. Higashikawa explains, “feels unnatural because it moves in a straight line from shape A to shape B.” It was therefore necessary to supplement the movement between the poses registered in FACS so that the transitions became more delicate and realistic. Briefly, the method works as follows. First, a FACS-based pose is registered in the pose registration tool. Delicate movements are then handled by preparing bones and adjusting the animation curves of the driven keys that control the transition into each pose, removing the linear impression. In addition, a “Layered Texture Node” blends the poses as overlapping layers, so that even when Pose A and Pose B are triggered simultaneously, the facial rig can be set to produce the desired movement and blend them beautifully. As a result, the poses do not interfere with each other, and delicate muscle movements can be created.
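The difference between a linear transition and a shaped driven-key curve, and the idea of stacking simultaneous poses, can be sketched in plain Python. This is an illustration under stated assumptions, not the studio's setup: a smoothstep function stands in for a hand-tuned driven-key curve, and simple additive offset layering stands in for the Layered Texture Node approach. Pose and channel names are hypothetical.

```python
from typing import Callable, Dict

Pose = Dict[str, float]  # hypothetical: channel name -> value


def linear(t: float) -> float:
    # A straight line from shape A to shape B: the "linear impression".
    return t


def ease_in_out(t: float) -> float:
    # Smoothstep: zero slope at both ends, similar in spirit to flattening
    # the tangents of a driven key's animation curve.
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def drive(neutral: Pose, target: Pose, driver: float,
          curve: Callable[[float], float] = ease_in_out) -> Pose:
    # The driver value (0..1) is remapped through the curve before blending.
    w = curve(driver)
    return {ch: neutral[ch] + (target[ch] - neutral[ch]) * w for ch in neutral}


def layer(neutral: Pose, *driven: Pose) -> Pose:
    # Additive layering: each pose contributes only its offset from neutral,
    # so two poses active at once stack instead of overwriting each other.
    out = dict(neutral)
    for pose in driven:
        for ch, value in pose.items():
            out[ch] += value - neutral[ch]
    return out


neutral = {"brow_L.ty": 0.0, "lid_L.rx": 0.0}
brow_raise = {"brow_L.ty": 1.0, "lid_L.rx": 0.0}
lid_close = {"brow_L.ty": 0.0, "lid_L.rx": 30.0}

print(drive(neutral, brow_raise, 0.25, linear)["brow_L.ty"])  # 0.25
print(drive(neutral, brow_raise, 0.25)["brow_L.ty"])          # 0.15625
print(layer(neutral, drive(neutral, brow_raise, 0.5), drive(neutral, lid_close, 1.0)))
# {'brow_L.ty': 0.5, 'lid_L.rx': 30.0}
```

With the eased curve, a quarter of the way along the driver the brow has moved only about 16% of the distance, so motion starts softly instead of at constant speed.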

The game also showcases the graceful swaying and softness of clothing. To achieve this, SQUARE ENIX combined its renowned physics engine, “Bonamik”*1, with the auxiliary bone system “KineDriver”*2. Despite Bonamik’s impressive capabilities, expressing intricate swaying and interaction, especially with double-layered skirts, was challenging. Lead Rigging Artist Higashikawa explained their approach: “In Maya, I set up driven keys at the base of the swaying motion and on the auxiliary bones, then used KineDriver to apply these changes to the actual character model. Through trial and error in Maya, we fixed polygon clipping issues and achieved a beautiful swaying sensation, then reflected those adjustments onto the in-game model. This workflow proved highly efficient.”

*1 Bonamik: A bone-based simulation solver developed by the company’s Technology Promotion Department. Bones can be connected in series and in parallel to simulate cloth, for example.

*2 KineDriver: A system developed by the company’s Technology Promotion Department that uses auxiliary bones to correct shapes.
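Footnote *1 describes Bonamik as a bone-based solver whose bones can be chained in series. The sketch below shows that general idea with a textbook technique, Verlet integration plus distance constraints, on a 2D chain whose first joint is pinned to the body. It is not Bonamik's algorithm, which is proprietary; the class name, gravity, damping, and iteration count are all assumptions.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


class BoneChain:
    """Generic 2D joint chain connected in series; joint 0 is pinned to the body."""

    def __init__(self, joints: List[Vec]):
        self.pos = [list(p) for p in joints]
        self.prev = [list(p) for p in joints]  # previous positions (Verlet state)
        self.rest = [math.dist(a, b) for a, b in zip(joints, joints[1:])]

    def step(self, root: Vec, dt: float, gravity: float = -9.8,
             damping: float = 0.98, iterations: int = 10) -> None:
        old = [p[:] for p in self.pos]
        # 1. Verlet integration: the root follows the body, free joints keep momentum.
        self.pos[0] = list(root)
        for i in range(1, len(self.pos)):
            x, y = old[i]
            px, py = self.prev[i]
            self.pos[i] = [x + (x - px) * damping,
                           y + (y - py) * damping + gravity * dt * dt]
        self.prev = old
        # 2. Restore each bone's rest length (Gauss-Seidel relaxation).
        for _ in range(iterations):
            for i, rest in enumerate(self.rest):
                a, b = self.pos[i], self.pos[i + 1]
                dx, dy = b[0] - a[0], b[1] - a[1]
                d = math.hypot(dx, dy) or 1e-9
                k = (d - rest) / d
                if i == 0:  # pinned root: only the child joint moves
                    b[0] -= dx * k
                    b[1] -= dy * k
                else:       # split the correction between both joints
                    a[0] += dx * k * 0.5
                    a[1] += dy * k * 0.5
                    b[0] -= dx * k * 0.5
                    b[1] -= dy * k * 0.5


chain = BoneChain([(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)])
for _ in range(60):  # one second at 60 fps
    chain.step(root=(0.0, 0.0), dt=1 / 60)
print(chain.pos[-1])  # the tip has swung below the root
```

Several such chains placed side by side would correspond loosely to the "in parallel" arrangement the footnote mentions for cloth such as skirts.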

The game uses two types of rigs to achieve the desired character movements: the “primary rig” and the “secondary rig.” The primary rig handles basic body movements, while the secondary rig handles more delicate motion, such as swaying clothes and hair. When the team tests these rigs on the actual hardware and the movement doesn’t meet expectations or clipping occurs, they refine it using the secondary rig. This involves retargeting the animation results, exported as FBX files, onto the secondary rig for further adjustment.
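The retarget-then-adjust step can be sketched with animation already loaded into dictionaries. Reading the FBX file itself (for example with the Autodesk FBX SDK) is omitted, and the joint names, the name map, and the idea of storing fixes as additive per-frame deltas are hypothetical illustrations, not the team's actual data format.

```python
from typing import Dict

Channels = Dict[str, float]  # hypothetical: joint channel -> value
Clip = Dict[int, Channels]   # frame number -> channels


def retarget(primary: Clip, name_map: Dict[str, str]) -> Clip:
    """Rename primary-rig channels to their secondary-rig counterparts.
    Channels with no counterpart are dropped."""
    return {
        frame: {name_map[ch]: v for ch, v in chans.items() if ch in name_map}
        for frame, chans in primary.items()
    }


def apply_fixes(clip: Clip, fixes: Clip) -> Clip:
    """Layer artist corrections (e.g. rotating a skirt joint away from a thigh
    to remove clipping) on top of the retargeted result, leaving it untouched."""
    out = {frame: dict(chans) for frame, chans in clip.items()}
    for frame, deltas in fixes.items():
        target = out.setdefault(frame, {})
        for ch, delta in deltas.items():
            target[ch] = target.get(ch, 0.0) + delta
    return out


primary = {
    0: {"Spine1.rx": 5.0, "Hip_L.rx": 10.0},
    1: {"Spine1.rx": 6.0, "Hip_L.rx": 20.0},
}
name_map = {"Spine1.rx": "spine_01.rx", "Hip_L.rx": "thigh_l.rx"}

secondary = retarget(primary, name_map)
fixed = apply_fixes(secondary, {1: {"skirt_front_01.rx": -8.0}})
print(fixed[1])  # {'spine_01.rx': 6.0, 'thigh_l.rx': 20.0, 'skirt_front_01.rx': -8.0}
```

Keeping the fixes separate from the retargeted data means a new primary take can be retargeted again without losing the secondary-rig adjustments.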

Video: Primary vs. Secondary Comparison | Final Fantasy XVI

Whether it’s “Bonamik” or “KineDriver,” SQUARE ENIX’s technology department places immense trust in Autodesk solutions. Eitaro Iwabuchi, Lead Cinematic Technical Artist, says that Maya’s high extensibility sets it apart from other DCC tools: “The default functions alone aren’t enough, and human error imposes its own limits. To limit data mistakes, we also added a work data checker to Maya. I appreciate that we can give our artists a comfortable and efficient environment by building our own functions on top of Maya like this. Maya is so powerful.”

Lead Cinematic Technical Artist, Eitaro Iwabuchi

Enhancing Project Efficiency with Maya and MotionBuilder

In this project, some artists preferred working in Maya, while others preferred MotionBuilder. This clear split prompted the development of a pipeline that seamlessly transfers MotionBuilder data to the game engine through Maya, using the “Send To Maya” functionality. In addition, certain tasks can only be performed in Maya thanks to its extensive SDK. Mr. Iwabuchi walked us through how they built in a step that resolves issues flagged by Maya’s “checker” tool before exporting the data to the game engine.

Cinematic Technical Artist, Atsushi Sakamoto

Mr. Iwabuchi and Cinematic Technical Artist Atsushi Sakamoto added this checker to Maya. The tool automatically checks the work data for errors or omissions once the work is complete. Running this check before handing the data off to downstream processes keeps bugs from reaching the game engine. The cutscene checker collectively verifies frame rates, naming conventions, and human errors, such as wrong keys entered when setting character positions and movements. “These are things that can be confirmed with the human eye, but on a large-scale title like this one, with so many characters appearing, a flow that centrally manages the data and accurately finds errors may be unspectacular, but it is essential,” says Mr. Sakamoto.
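A checker of this kind boils down to a list of rules run over the scene data. The sketch below works on a plain in-memory description of a cutscene rather than calling Maya, and the expected frame rate, the naming pattern, and the per-frame jump threshold are assumptions chosen for illustration.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple

EXPECTED_FPS = 30.0  # assumption: the project's cutscene frame rate
NAME_RULE = re.compile(r"^(chr|prop|cam)_[a-z0-9]+(_[a-z0-9]+)*$")  # hypothetical convention
MAX_SPEED = 50.0     # assumption: largest plausible change per frame, in scene units


@dataclass
class Track:
    node: str
    keys: List[Tuple[int, float]]  # (frame, value) pairs for one animated channel


def check_scene(fps: float, start: int, end: int, tracks: List[Track]) -> List[str]:
    """Return human-readable problems; an empty list means the scene passes."""
    errors = []
    if fps != EXPECTED_FPS:
        errors.append(f"frame rate is {fps}, expected {EXPECTED_FPS}")
    for t in tracks:
        if not NAME_RULE.match(t.node):
            errors.append(f"{t.node}: violates naming convention")
        frames = [f for f, _ in t.keys]
        if len(frames) != len(set(frames)):
            errors.append(f"{t.node}: more than one key on the same frame")
        for f in frames:
            if not start <= f <= end:
                errors.append(f"{t.node}: key at frame {f} is outside {start}-{end}")
        # A huge jump between neighboring keys usually means a mistyped value.
        keys = sorted(t.keys)
        for (f0, v0), (f1, v1) in zip(keys, keys[1:]):
            if f1 > f0 and abs(v1 - v0) / (f1 - f0) > MAX_SPEED:
                errors.append(f"{t.node}: jump of {v1 - v0} between frames {f0} and {f1}")
    return errors


tracks = [
    Track("chr_hero", [(0, 0.0), (10, 100.0)]),
    Track("Hero01", [(0, 0.0), (1, 500.0), (200, 0.0)]),
]
for problem in check_scene(24.0, 0, 120, tracks):
    print(problem)
# frame rate is 24.0, expected 30.0
# Hero01: violates naming convention
# Hero01: key at frame 200 is outside 0-120
# Hero01: jump of 500.0 between frames 0 and 1
```

Returning a list instead of stopping at the first failure lets an artist fix every reported problem in one pass before export.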

According to Mr. Iwabuchi, the decision to integrate MotionBuilder stemmed from their exploration of approaches in response to the artists’ persistent requests: “The artists expressed a strong desire to have the option of using MotionBuilder. They meticulously arrange camera positions during the layout phase to ensure seamless shot transitions. This necessitates high playback speeds and seamless connections. MotionBuilder is loved because of its exceptional playback capabilities.”

The Flow of Cutscene Production to Animation

In the “animatics” stage, a simple 3D model is used, and rough movements are added with MotionBuilder or Maya to create a starting point for motion capture shooting. “We performed motion capture using only storyboards when the characters and assets weren’t finished, but after the characters and assets were completed, we created as many animatics as possible before recording,” explained Cinematic Manager Takeo Suzuki. “Our task was to place objects one after another in the scene to determine the camera angle roughly. We can do this crisply and without stress with MotionBuilder, which boasts high processing speed.”

Cinematic Manager, Takeo Suzuki

“In addition, at the motion capture shooting location, confirmation work is performed where the actors’ movements are inserted into the background laid out in MotionBuilder in real-time. Before shooting, we determine the camera positions and cut structures using storyboards and animatics. Then, we can check or adjust the detailed renditions that occur when the actors perform on set, the actors’ preferences, and the connections between the performances on the spot. Real-time confirmation is an indispensable process in terms of budget management because it allows for more accurate and high-quality movements and avoids the risk of reshooting.”

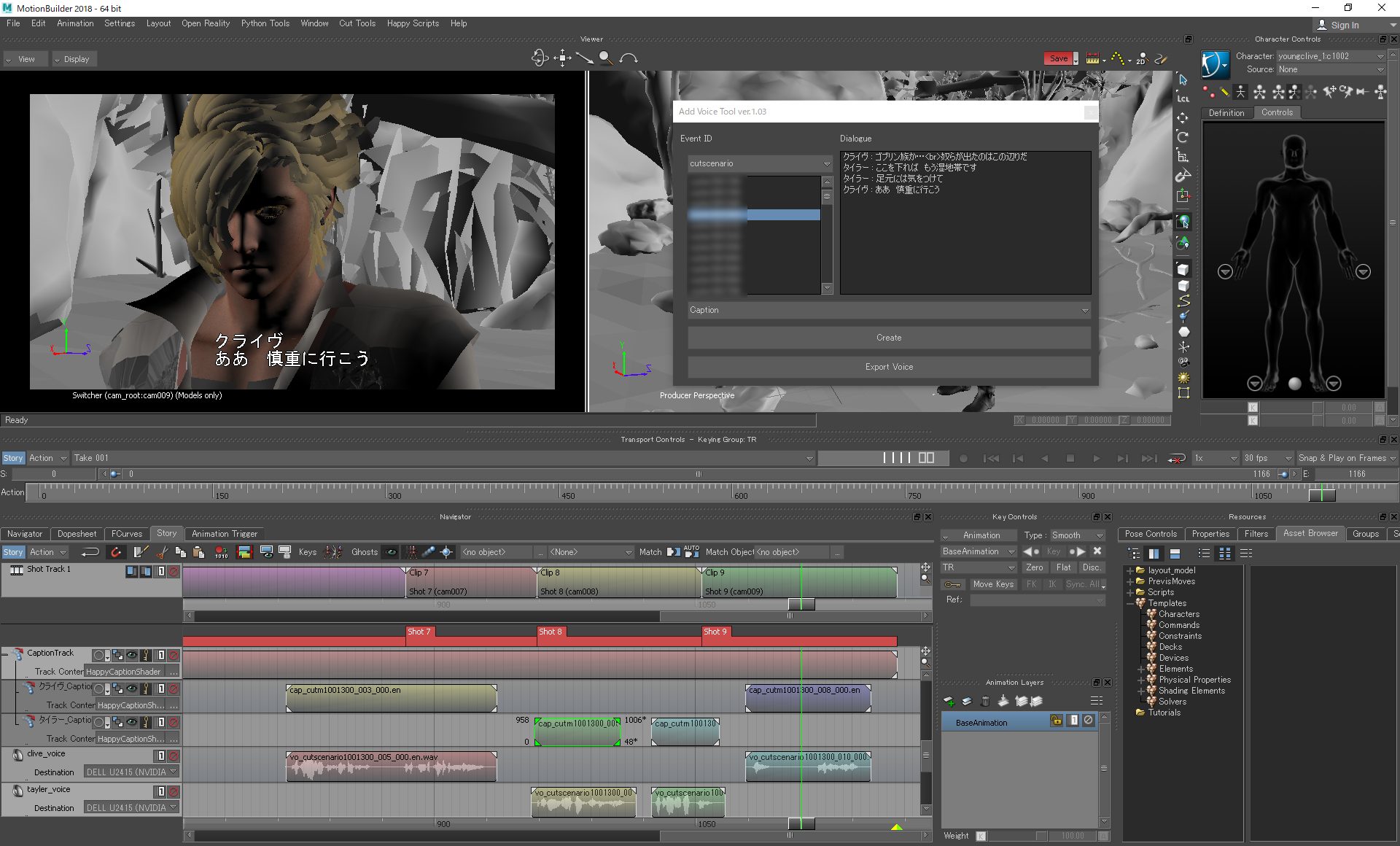

Voice editing work in MotionBuilder

MotionBuilder also helps adjust voice timings, which used to be a time-consuming process. Previously, the voices were first placed in MotionBuilder, then placed again by hand in Premiere Pro to create a video for supervisory review, and finally placed once more when the data was transferred to the game engine. It was a tedious task that took a lot of time. To make this process more efficient, Mr. Sakamoto developed a feature called “Add Voice.” It exports the voice placement data from MotionBuilder so it can be used in Premiere Pro to create the review video, and brings the same placement data into the game engine, so a task that previously had to be done three times now only needs to be done once. This allowed the teams to focus on the quality of the story instead of spending time on repetitive tasks.
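The core of the “Add Voice” idea, placing voices once and deriving every downstream format from that single record, can be sketched as follows. The JSON layout, the 30 fps timeline, the `line_id` naming, and both consumer functions are hypothetical; in particular, the comma-separated rows are a stand-in and not a real Premiere Pro import format.

```python
import json
from dataclasses import asdict, dataclass
from typing import List

FPS = 30  # assumption: non-drop-frame cutscene timeline


@dataclass
class VoicePlacement:
    line_id: str      # hypothetical voice-line ID
    start_frame: int  # where the clip sits on the MotionBuilder timeline


def to_timecode(frame: int, fps: int = FPS) -> str:
    seconds, ff = divmod(frame, fps)
    minutes, ss = divmod(seconds, 60)
    hh, mm = divmod(minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def export_placements(placements: List[VoicePlacement]) -> str:
    # Serialize once; every downstream consumer reads this same data.
    return json.dumps([asdict(p) for p in placements], indent=2)


def review_rows(data: str) -> List[str]:
    # Consumer 1: line ID plus timecode, for building the review edit.
    return [f"{p['line_id']},{to_timecode(p['start_frame'])}" for p in json.loads(data)]


def engine_events(data: str) -> List[dict]:
    # Consumer 2: event times in seconds for the game engine.
    return [{"voice": p["line_id"], "time": p["start_frame"] / FPS} for p in json.loads(data)]


data = export_placements([VoicePlacement("vo_0001", 0), VoicePlacement("vo_0002", 1845)])
print(review_rows(data))    # ['vo_0001,00:00:00:00', 'vo_0002,00:01:01:15']
print(engine_events(data))  # [{'voice': 'vo_0001', 'time': 0.0}, {'voice': 'vo_0002', 'time': 61.5}]
```

Because both outputs are derived from the same export, a timing change made in MotionBuilder reaches the review video and the engine without anyone re-entering it.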

Technological innovation in entertainment has allowed us to immerse ourselves in experiences beyond our imagination. The advent of CG in visual media has led to an exponential expansion in the range and quality of visuals. Combined with a wide range of cutting-edge technologies, CG is seen as holding vast untapped potential. SQUARE ENIX plans to explore further projects, armed with Autodesk’s technology and its own proprietary tools, pushing the boundaries of what’s possible.

Watch the GDC 2024 Autodesk Developer Summit talk with SQUARE ENIX.