In this chat with Lemon Sky Studios, the team walks you through how they tackled the creative workflows behind their in-house Metal Genesis game and the Playdate with Winnie the Pooh animated series. They talk about the tools they rely on, why open standards matter to them, and what they think really makes a studio thrive.

Q: Tell us about Lemon Sky Studios.

A: Lemon Sky Studios is a leading game and animation studio in Southeast Asia, known for delivering high-end CGI and cutting-edge content for the global entertainment industry. Its technical expertise shines through in projects like Metal Genesis and Playdate with Winnie the Pooh, two productions that demonstrate the studio’s versatility across both realistic and stylized art directions.

Metal Genesis pushes the limits of real-time cinematic production. Built with Autodesk’s Media & Entertainment Collection, the project used Autodesk Maya for modeling, rigging, and animation, while motion capture data processed in Autodesk MotionBuilder brought characters to life with seamless precision. Rendered entirely in Unreal Engine, the cinematic leveraged real-time workflows for rapid iteration, dynamic lighting, and film-quality visuals. The team also explored real-time look development and sequencer-based cinematography to elevate the stylized aesthetic and fast-paced storytelling for the Metal Genesis universe.

Meanwhile, Playdate with Winnie the Pooh highlights Lemon Sky’s strength in long-form animation. In Season 2, we used Maya across every stage, from modeling and rigging to fur grooming, animation, lighting, and rendering with the included Autodesk Arnold plugin. To meet the show’s high visual standard, we needed a pipeline that provided full control and flexibility in both rendering and compositing. Arnold was the ideal choice: it offers extensive render data for compositing, which minimized the need to re-render shots during fixes. This approach allowed the team to achieve both consistency and efficiency while maintaining the warm, high-quality look expected of a beloved family series.

Q: What would you say is Lemon Sky’s “superpower”?

A: Our strength lies in adaptability, meeting the diverse needs of clients across animation and game development. Over the past decade, we’ve partnered with leading names such as DreamWorks, Nickelodeon, Disney, Scopely, Blizzard, Capcom, and Insomniac Games, integrating seamlessly into their unique pipelines to deliver both artistic and technical excellence. From traditional series to complex game productions, our team thrives in any workflow. Always evolving, we continue to explore real-time rendering and the OpenUSD pipeline to stay ahead of industry innovations and push creative boundaries.

Q: Can you give us an overview of the creative vision behind the Metal Genesis cinematic?

A: The Metal Genesis cinematic was produced entirely in-house by Lemon Sky Studios, pushing creative boundaries with bold new mech designs and high-intensity battle sequences. Alongside the visual storytelling, the project also served as an opportunity to explore and refine our production pipeline, specifically the integration between Maya and Unreal Engine.

The narrative centers around epic battles featuring Gattai-style mechanics, where multiple machines combine to form a single, larger, and more powerful robot. The cinematic blends realistic human characters with stylized mecha elements, creating a visually striking fusion of grounded emotion and large-scale mechanical action.

Q: What were the technical breakthroughs in producing Metal Genesis?

A: The modeling, rigging, and animation stages were crafted in Maya, while the scene assembly and lighting took place in Unreal Engine, where the final cinematic was produced.

The seamless data exchange between the two platforms allowed us to harness the strengths of each, combining Maya’s precision with Unreal Engine’s real-time power.

Using Unreal Engine 5, we achieved film-quality lighting and materials entirely in real time, powered by Lumen and high-density assets that bring every detail to life without traditional baking. For the cinematic trailer, our team rebuilt a war-torn city from the ground up inside the engine, capturing every flicker of light and trace of destruction with stunning precision. The result is a fusion of artistry and technology, dramatically reducing production time while delivering visuals worthy of the big screen.

Q: Can you walk us through the creation process for the giant mechs?

A: This project gave our team the chance to bring a long-standing creative idea to life while exploring new ways to merge artistry and technical innovation. Drawing inspiration from the classic Gattai concept, in which multiple mechs merge into one powerful machine, we chose the theme for its nostalgic appeal and for the creative and technical challenges it presented.

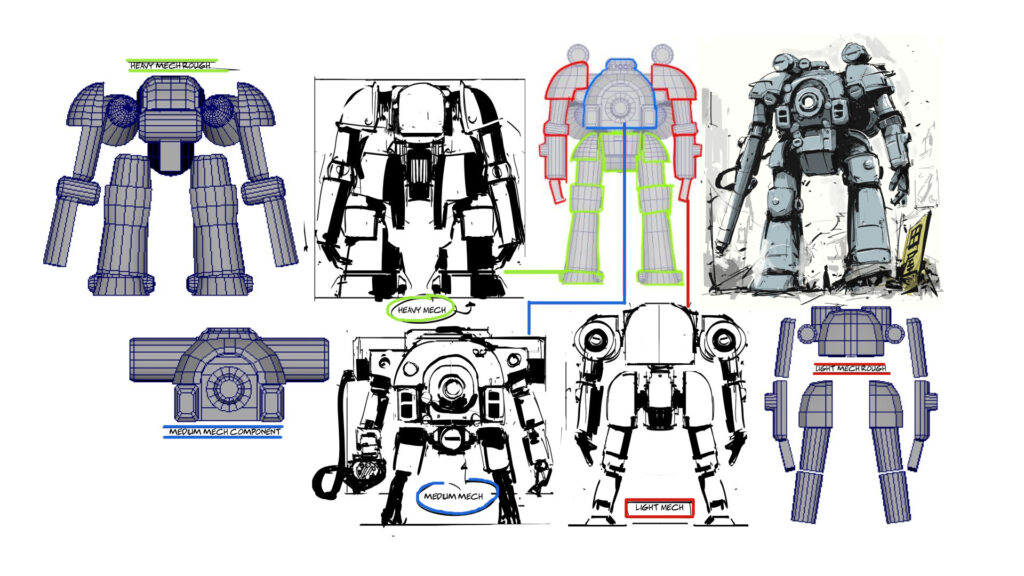

We began by designing a heavy-duty industrial mech faction, defined by steel plating, exposed pistons, and powerful hydraulic systems engineered for brute mechanical strength rather than finesse.

In contrast, the second faction features a music-inspired, retro aesthetic, blending vibrant color palettes, rhythmic design motifs, and sleek contours that emphasize style over sheer power. This mech line was envisioned as an artistic counterpoint to the industrial faction—graceful in motion, expressive in form, and driven by creativity rather than force.

From an animation standpoint, one of the core challenges was developing the transformation logic, ensuring each mech functioned believably as an individual unit while combining seamlessly into a larger form. Balancing mechanical plausibility with visual spectacle became a defining achievement of the project.

Q: How did you approach the creation of human characters like Eka?

A: While the workflow for creating human characters like Eka followed a production pipeline similar to that of the mechs, covering modeling, texturing, rigging, and shading, the challenges were fundamentally different. With human characters, the focus shifts to believability and subtle realism. Even minor deviations in anatomical proportions can break immersion, so we paid close attention to skeletal structure, muscle flow, and overall body balance.

We approached every aspect of the character design with precision, from the texture of the gloves to the detailing on the boots, to maintain visual consistency and realism. The face was particularly demanding. We sculpted it down to the pore level to ensure it could withstand close-up cinematic shots while retaining emotional expressiveness and realism.

Integrating Eka into Unreal Engine presented its own set of challenges. While MetaHuman provided a strong foundational framework, especially for facial topology and rigging, we customized the assets significantly. We developed bespoke materials and shaders to push the realism beyond the default MetaHuman look and tailored them to fit the stylized-yet-grounded aesthetic of our cinematic world.

MetaHuman also enabled us to capture and apply realistic facial performance through facial motion capture, giving Eka a more expressive and lifelike presence. Combining that data with our custom facial rig and shader work allowed us to bridge the gap between technical execution and emotional storytelling.

Q: How do you use MotionBuilder and Maya together in your animation pipeline?

A: Maya served as the backbone of our production pipeline, providing a stable, reliable environment for modeling, rigging, and animation. From the outset, we aimed to explore a more advanced pipeline by integrating Maya with Unreal Engine. Since we were relatively new to Unreal at the time, having Maya as our core platform helped ease the transition, thanks to its mature toolset and our existing library of custom setups from previous projects.

Maya was used to build clean, production-ready assets and animations. Its precision in rigging and animation layout ensured a solid foundation before moving into Unreal for real-time rendering. This setup allowed us to maintain high asset quality while leveraging Unreal Engine’s rendering power and real-time iteration capabilities.

To enhance realism in character performance, we implemented motion capture as part of our animation workflow. Our animators performed the movements themselves, capturing the performances using MotionBuilder.

MotionBuilder’s support for live mocap streaming made it ideal for real-time previewing within the digital environment, allowing the team to immediately evaluate performances on the character rigs.

This real-time feedback loop significantly reduced turnaround time, as animators could adjust performances on the spot, dial in the style we were aiming for, and minimize extensive post-cleanup.

We also leveraged HumanIK (HIK) for rig compatibility, along with seamless file interchange between MotionBuilder and Maya. This interoperability allowed for efficient retargeting and cleanup of mocap data in MotionBuilder, followed by refinement and final animation polish in Maya, without technical bottlenecks.

By combining Maya and MotionBuilder, we created a streamlined, performance-driven pipeline that enabled the team to focus less on technical hurdles and more on delivering expressive, cinematic-quality animation.

Q: Does your team become a well-oiled machine where every animation project feels routine, or does each one feel like the first?

A: While experience has made our workflow more efficient, each project still brings its own unique challenges. To manage this, we’ve developed a range of custom Maya tools that automate key tasks in rigging, animation, and rendering, significantly reducing production time. This has allowed us to build a scalable and adaptable pipeline that maintains creative flexibility while supporting fast-paced episodic schedules or larger, more complex productions.

Q: How are you using open standards or other technologies to improve cross-team and cross-studio efficiency?

A: We’re actively leveraging open standards such as OpenUSD (Open Universal Scene Description), VDB, and Alembic to enhance interoperability across teams and partner studios. These formats enable us to share complex data, including geometry, animation, and volumetrics, while preserving fidelity and ensuring consistency across different software environments.

By standardizing on these technologies, we’ve not only streamlined asset exchange but also reduced pipeline bottlenecks, making collaboration more efficient and scalable across departments and external partners.

Q: What was the most interesting aspect of working on Playdate with Winnie the Pooh?

A: One of the most exciting aspects of this project was bringing Winnie the Pooh to life in 3D with plush-like fur. The show was commissioned by Disney Junior and produced by OddBot, with Lemon Sky serving as the animation partner, and it marks the first time Disney has depicted Winnie the Pooh with realistic fur in 3D animation. The plush-style aesthetic was a creative direction proposed by OddBot and brought to life through close collaboration among all partners.

To preserve the charm and visual language of the original 2D animation, the characters are animated on 2s, giving them a hand-drawn feel. Meanwhile, the camera moves on 1s, creating a playful yet polished aesthetic that blends traditional appeal with the fluidity and depth of modern 3D animation.
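For readers less familiar with the terminology, the timing described above can be illustrated with a minimal Python sketch. It is not the studio's tooling: the function names `hold_on_twos` and `sample_shot`, the start frame, and the toy curves are all hypothetical, chosen only to show a character pose being held for two frames while the camera is evaluated on every frame.

```python
from typing import Callable, List, Tuple

Curve = Callable[[int], float]  # hypothetical: maps a frame number to a value


def hold_on_twos(frame: int, start_frame: int = 1) -> int:
    """Return the frame whose pose is shown at `frame` when animating on 2s.

    Each pose is held for two consecutive frames, counted from `start_frame`.
    """
    offset = frame - start_frame
    return start_frame + (offset // 2) * 2


def sample_shot(
    character: Curve, camera: Curve, start_frame: int, end_frame: int
) -> List[Tuple[int, float, float]]:
    """Sample a shot with the character on 2s and the camera on 1s."""
    rows = []
    for frame in range(start_frame, end_frame + 1):
        held_frame = hold_on_twos(frame, start_frame)
        # Character reads the held frame; camera reads the current frame.
        rows.append((frame, character(held_frame), camera(frame)))
    return rows


if __name__ == "__main__":
    # Toy curves (assumptions): linear motion for both character and camera.
    character_curve = lambda f: f * 10.0
    camera_curve = lambda f: f * 0.5
    for row in sample_shot(character_curve, camera_curve, 1, 6):
        print(row)
```

In the sampled rows, the character value changes only on every second frame while the camera value changes on every frame, which is the contrast between the stepped, hand-drawn feel of the characters and the smooth camera move.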

Q: What were the key technical challenges in producing Playdate with Winnie the Pooh, and how were they addressed?

A: One of the biggest technical challenges was achieving high-quality visuals, such as realistic fur and lush environments, while maintaining performance and consistency across episodes. We used Maya’s XGen Interactive Grooming to create accurate, plush-like fur with smooth viewport playback. For dense foliage, the MASH system combined with render proxies allowed us to populate large environments efficiently without overloading the render farm.

Q: What made Maya the ideal tool?

A: Maya was the ideal choice for Playdate with Winnie the Pooh because of its powerful, well-integrated toolset for animation, rigging, grooming, and rendering with its Arnold plugin. These capabilities are critical for delivering high-quality, stylized 3D character animation within the constraints of an episodic production pipeline.

To ensure visual consistency across episodes, we combined Maya’s core features with a suite of internal tools, including light exporters, advanced preset readers and writers, and custom farm submission tools. Additionally, Autodesk Flow Production Tracking played a crucial role in streamlining communication and review processes with the client, helping us maintain clarity and consistency throughout production.

Q: What is the most important technological strength a studio can have today?

A: While exceptional talent remains the foundation of any successful studio, it’s the combination of skilled artists and a strong project management team that truly brings creative visions to life. At the same time, technological adaptability has become just as critical. The industry evolves rapidly, from real-time engines to AI-assisted workflows, and the ability to integrate these tools into production pipelines allows a studio to stay competitive, efficient, and forward-thinking. In our experience, the most successful studios are those that pair creative excellence with a culture of continuous technological evolution, embracing change rather than resisting it.

Learn more about Lemon Sky Studios.

Get all the tools you need, including Maya, MotionBuilder, and five Arnold subscriptions, bundled in the Autodesk Media & Entertainment Collection.